As a software company grows, so too does its testing requirements. Each new developer needs the capability to validate their work. The problem is that resources cannot easily scale with developers. If it were to take an hour to do validation, five developers cannot wait five hours. Further, in the cloud the most typical cost model is pay-as-you-go, which means you are penalized for any wasted cycles where a node is not doing work. In this blog, I will share some of the steps I have taken toward scaling up our resource pool as we have grown, allowing us to maximize efficiency and utilization while minimizing idle time and cost.

Back in 2017 when we were on the previous (legacy) generation of RapidResponse, we were working with a small set of virtual machines/nodes, and at the time it ran most of the tests for the backend server in a few hours.

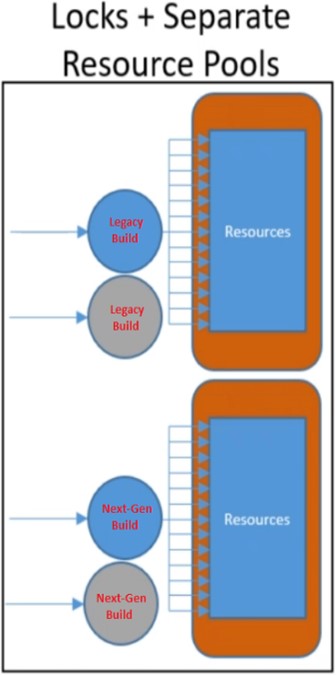

As we moved on through development with the next/current generation of RapidResponse, we needed to start running tests on it as well. But as we were not dropping support for the previous generation at the time, we still needed those nodes to run the older tests. To start off we introduced a new set of nodes (+50% increase) to handle growing development on the next-gen product. As development continued, we added another set to match previous-gen’s resource pool, now at double our initial VM (virtual machine) count.

From here the problem with idle nodes begins.

What code are we testing?

DevOps is the team that compiles official builds for the company to test, and they did not have additional resources. This means that they would compile a prev-gen build, and then later alternate to compiling a next-gen build. Having two separate resource pools meant we would get one build at a time and only 50% of our total resources would work on it, while the other 50% was idle waiting for the alternate build type. If half of our compute resources were idle at any given time, then we might as well only have half the nodes.

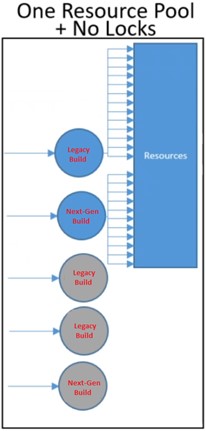

This brought on the first generation of resource pool changes in the AFT (Automated Functional Tests) pipeline. Productivity can be raised by having idle resources contribute to the ongoing tests. The two sets of nodes were combined into a single pool. This led to each build completing at twice the speed. By the time DevOps had finished switching from compiling prev-gen to compiling next-gen, the tests for older product had finished and all the nodes could participate with newer product testing instead of half the nodes going idle during this time.

This brought on the first generation of resource pool changes in the AFT (Automated Functional Tests) pipeline. Productivity can be raised by having idle resources contribute to the ongoing tests. The two sets of nodes were combined into a single pool. This led to each build completing at twice the speed. By the time DevOps had finished switching from compiling prev-gen to compiling next-gen, the tests for older product had finished and all the nodes could participate with newer product testing instead of half the nodes going idle during this time.

The timing in which the tests finished is also important. If a single test takes 20 minutes longer than the rest, and the results for a build are not released until all tests are complete, then the single test should be put on its own VM in an effort to complete at the same time as the rest of the tests. For this, existing test data is used to determine how long things take, and those durations then drive how tests are distributed across the nodes.

This initially worked very well, but another problem appeared.

Tests can fail dramatically

In some cases, we might produce a server hang during testing. To the pipeline, this means one node still registers as though testing has not completed, and therefore it would hold the entire pipeline and prevent the rest of the nodes from going and working on the next build. The underlying issue was how locks were used to control how the nodes picked up work. We use Jenkins to manage our testing pipeline and the total set of tests is broken down into individual groups of work we call “jobs.” Each job for any given build must be finished before picking up any jobs for the next build. This meant that the test pipeline would not release any resource until all testing was completed to prevent builds from interrupting each other.

This lock prevented all other nodes from picking up new work if one node was still running tests, whether those tests were taking longer to complete than normal, or if they were completely stuck in a deadlock.

The solution was to have N individual locks, one around each node. A build could come in and would make a request for the nodes it needed for testing, and nodes that were not locked would immediately get to work. If one of those nodes took longer, the next build could still request resources, and the remaining worker nodes would respond and pick up work, no longer waiting for the one that was stuck. Work could then be assigned as soon as it came in and nodes would not wait for other nodes to complete, this led to having 100% resource utilization (the removal of all idle time) when there was work to be done.

The solution was to have N individual locks, one around each node. A build could come in and would make a request for the nodes it needed for testing, and nodes that were not locked would immediately get to work. If one of those nodes took longer, the next build could still request resources, and the remaining worker nodes would respond and pick up work, no longer waiting for the one that was stuck. Work could then be assigned as soon as it came in and nodes would not wait for other nodes to complete, this led to having 100% resource utilization (the removal of all idle time) when there was work to be done.

Over time, Kinaxis began to adapt an internal CI/CD (continuous-integration/continuous-delivery) model. We started to run tests against our product every time someone made a change, and we doubled our node count (now at 4x the original number) to handle this increased workload. However, complexity was introduced here. Compiling builds to be tested on each commit meant that builds between next-gen and legacy were no longer 1-to-1, we might have 10 legacy builds and two next-gen builds depending on how quickly changes were being made. Since resources were now greedy, they might pick up five legacy builds in the order they came in before responding to one of the next-gen builds, keeping next-gen developers waiting for hours.

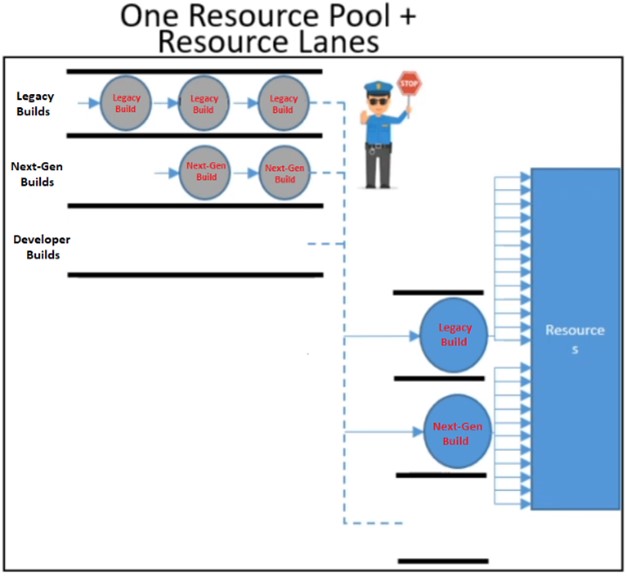

This was unacceptable in terms of productivity. Developers need to know if their code changes work, so how do we make sure that the resources are not ignoring next-gen builds all day while they do multiple legacy builds? This problem was introduced by combining the resource pools into one to avoid idle time. But just because we have one pool of resources does not mean there can only be one way to reach them, we just need a scheduler to ensure fair resource usage.

Resource lanes

To solve this, I introduced the concept of “resource lanes.” Each lane corresponds to a type of build that comes in, for now we will have two lanes, one for next-gen builds and one for legacy builds. If a build enters an empty lane, then it is immediately allowed to queue jobs for resources. If no other builds are queued, then the resources will immediately start working on those jobs. If another build enters the same lane, it must wait until the preceding build finishes before it is allowed to queue for resources. This means that if five legacy builds arrive in an empty queue, the first one will start executing but the rest will run sequentially one after another. If a next-gen build arrives, it will immediately request resources because its lane is empty, which means workers will run those tests before the next legacy build in the legacy resource lane queue. This gives us control over resource starvation over specific lanes if we were to otherwise use a FIFO queue.

When we previously had a single resource lane for jobs to queue in it used to take us three hours to test two builds. With resource lanes and no idle time, we were able to increase efficiency by 150%, and more nodes further increase the overall throughput of the system. This is because in the earlier implementation the total throughput was determined by the longest piece of work, but now slower processing for testing in one lane does not affect the throughput of other lanes. The focus of the improvements here was the reduction of idle time, making sure that any time a node was idle it could find new work to do.

When we previously had a single resource lane for jobs to queue in it used to take us three hours to test two builds. With resource lanes and no idle time, we were able to increase efficiency by 150%, and more nodes further increase the overall throughput of the system. This is because in the earlier implementation the total throughput was determined by the longest piece of work, but now slower processing for testing in one lane does not affect the throughput of other lanes. The focus of the improvements here was the reduction of idle time, making sure that any time a node was idle it could find new work to do.

This scales up extremely well with additional resources. In the older lock-bound pipeline, the waste of new nodes will exceed the gain because the throughput is limited by the slowest piece of work. In the newer “lockless” pipeline we could add 1000 nodes and the total throughput of the pipeline would keep increasing because the efficiency ceiling is not determined by the number of nodes, but rather by the setup time of each job. If enough jobs are triggered that we spend more time doing setup for the tests than running the tests themselves, then we are no longer gaining efficiency as we are adding more work than we are saving.

This solution also supports the easy addition of more resources when we find that our work queue is growing faster than we can consume it. At any time, we can spin up cloud resources and add them to our worker pool, they can assist in running tests until we shrink the queue enough, and then those workers can be deallocated without any disruption to the pipeline. In the cloud, if there is no more work to do, the nodes can shut down after executing to save money.

With this design, the throughput of the test pipeline was so efficient that we were able to support developer feature-branch builds to be tested on these resources. As our tests and development team continued to expand, we continued to add more resources. We are now sitting on a pool of nodes more than 20x larger than 2017 which act as our primary resource pool, and these nodes are shared across eight resource lanes representing major categorizations of our builds (Official Release, Personal Builds, Legacy Builds, Next-gen Builds, Stress Testing, etc).

Several other problems arose during this growth, like the effect of the increased network traffic and overhead of the higher node count. In my next post I will get into some of the problems from this growth and some of the solutions that were put in place.